Home Lab

Architecture and Hardware Refresh

I get asked about my homelab quite a bit, so this post details the recent architecture and hardware changes. As with most labs, this one is an ever-changing beast… not to mention a great way to spend that hard-earned cash! This revision marks a major shift in the way VCF, NSX, SD-WAN, and AWS are used.

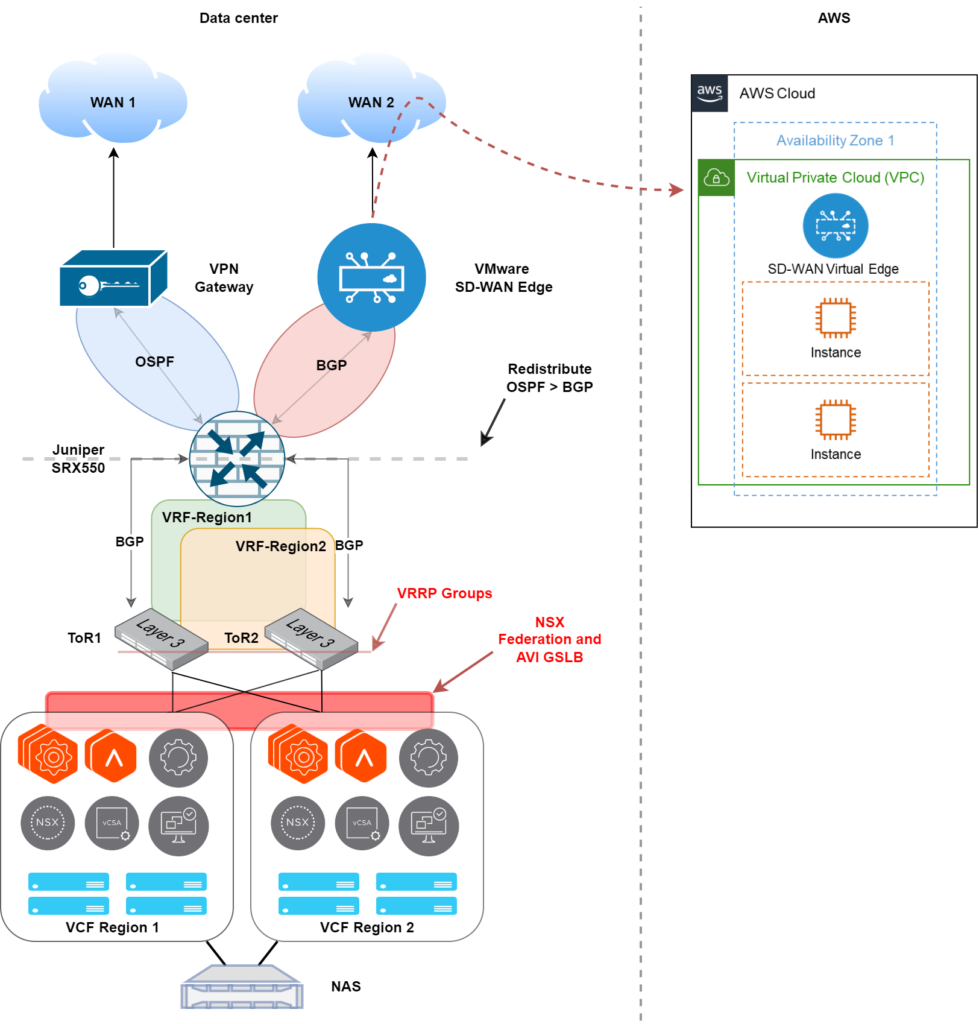

Lab Architecture

The diagram below is a representation of the lab's current architecture. The last lab revision included a lot of nested routers; recently, I scrapped all the virtualized routers and replaced them with physical top of rack switches.

Summary of the lab (top down).

- Two WAN connections: one is a dedicated backup link that is also used for remote access, and the other is the primary link, with connectivity provided by VMware SD-WAN.

- The core router / firewall is a Juniper SRX550. This peers with both the VPN gateway and the SD-WAN edge, using OSPF and BGP, respectively.

- The SRX550 has two VRFs, one for each VCF region, with links and routing protocols configured accordingly.

- The top of rack switches are Brocade ICX6610 48Ps, also configured with the VRFs, interfaces, BGP, and VRRP for gateway redundancy.

- Two physical VCF regions (bill of materials below). Using the VRFs, I have simulated two data centers and configured connectivity with NSX Federation and NSX ALB Global Server Load Balancing (GSLB).

- Shared storage between the two VCF regions is provided by a NAS.

- The connection from the data center to AWS is provided by VMware SD-WAN, configured in a branch-hub topology.

- The NAS is the primary backup target; critical data is also replicated to AWS S3 using Veeam (the backup architecture is detailed later).
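VRRP on the ICX6610 pair decides which switch answers for each gateway address. As a rough illustration of the election rule in RFC 5798 (the highest priority wins, and the higher primary IP address breaks a tie), here is a minimal Python sketch. It is not the switch firmware's implementation: it ignores preemption, timers and advertisements, and the switch names, priorities and addresses are hypothetical, not taken from the lab.

```python
from dataclasses import dataclass
from ipaddress import IPv4Address


@dataclass(frozen=True)
class VrrpRouter:
    name: str
    priority: int  # 1-254 for backups; 255 is reserved for the address owner
    primary_ip: IPv4Address


def elect_master(routers: list[VrrpRouter]) -> VrrpRouter:
    """Pick the VRRP master: highest priority, then highest primary IP."""
    if not routers:
        raise ValueError("at least one router must be participating")
    for r in routers:
        if not 1 <= r.priority <= 255:
            raise ValueError(f"{r.name}: priority must be 1-255")
    # Tuples compare element by element, so priority is checked first and the
    # primary IP address is only used to break a tie.
    return max(routers, key=lambda r: (r.priority, r.primary_ip))


# Hypothetical top of rack pair sharing one gateway virtual IP.
tor_a = VrrpRouter("tor-a", 110, IPv4Address("10.0.10.2"))
tor_b = VrrpRouter("tor-b", 100, IPv4Address("10.0.10.3"))

print(elect_master([tor_a, tor_b]).name)  # tor-a (higher priority)
print(elect_master([tor_b]).name)         # tor-b (tor-a has failed)
```

Dropping the master from the list is a crude way to model a failure: the remaining switch takes over the gateway address, which is the redundancy VRRP provides to the hosts.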

Bill of Materials

VCF Region 1

- Fujitsu Primergy RX2540 M1 E5-2640 v3 x2 / 256GB RAM / 3TB Flash Storage X2

- Dell R730 E5-2630 v3 x2 / 256GB RAM / 3TB Flash Storage

- Dell R720 E5-2620 v2 / 256GB RAM / 3TB Flash Storage

VCF Region 2

- Dell R730XD E5-2640 v3 / 256GB RAM / 3TB Flash Storage

- Dell R630 E5-2630 v3 / 256GB RAM / 3TB Flash Storage X2

- Dell R630 E5-2697 v3 / 256GB RAM / 3TB Flash Storage

Networking

- Sophos XG 430 – VPN Gateway

- VMware SD-WAN Edge 510

- Juniper SRX550 – Firewall / Core Router

- Brocade ICX 6610 48P – top of rack switching

- All host uplinks to the switches are 10GbE

Shared Storage

This is a bare-metal install of TrueNAS.

- 6 x 6TB WD Red drives

- SSD caching drive

- 10GbE networking
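The post does not say how the six drives are arranged in the pool, so the sketch below simply compares common layouts, assuming the RAIDZ options as a single six-drive vdev and the mirror option as three two-way mirrors. It is back-of-the-envelope arithmetic only: usable space is approximated as data drives times drive size, ignoring ZFS metadata, padding and reserved space, and the SSD caching drive is left out because a cache or log device adds no pool capacity.

```python
TB_BYTES = 10**12   # drive vendors quote decimal terabytes
TIB_BYTES = 2**40   # many OS tools report binary tebibytes


def raidz_usable_tb(drives: int, drive_tb: float, parity: int) -> float:
    """Approximate usable space of one RAIDZ vdev (data drives x drive size)."""
    if parity not in (1, 2, 3):
        raise ValueError("RAIDZ parity must be 1, 2 or 3")
    if drives <= parity:
        raise ValueError("a vdev needs more drives than parity devices")
    return (drives - parity) * drive_tb


def mirror_usable_tb(drives: int, drive_tb: float, width: int = 2) -> float:
    """Approximate usable space of a pool built from equal-width mirror vdevs."""
    if width < 2 or drives % width:
        raise ValueError("drives must split evenly into mirrors of width >= 2")
    return (drives // width) * drive_tb


def tb_to_tib(tb: float) -> float:
    """Convert decimal terabytes to binary tebibytes."""
    return tb * TB_BYTES / TIB_BYTES


# Assumed layouts for 6 x 6TB drives; the lab's actual layout is not stated.
layouts = {
    "RAIDZ1": raidz_usable_tb(6, 6, 1),
    "RAIDZ2": raidz_usable_tb(6, 6, 2),
    "RAIDZ3": raidz_usable_tb(6, 6, 3),
    "3 x 2-way mirror": mirror_usable_tb(6, 6),
}
for name, tb in layouts.items():
    print(f"{name:>16}: {tb:4.0f} TB (~{tb_to_tib(tb):.1f} TiB) before ZFS overhead")
```

The gap between the TB and TiB columns is one reason a pool often looks smaller in the TrueNAS UI than the drive labels suggest.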

Virtual Environment

Each region has the following deployed.

- SDDC Manager

- vCenter

- VMware NSX (Local Managers)

- VMware NSX (Global Managers)

- NSX Advanced Load Balancer (NSX ALB) Controllers

- NSX ALB Service Engines

- vRealize – Log Insight, Network Insight, Operations Manager

- vRealize Automation (Region 1 only)

- vRealize Lifecycle Manager (Region 1 only)

- Infoblox (Region 1 only)

- Microsoft AD (Region 1 only)

- Veeam (Region 1 only)

If I ever need to test something that will not be permanent, I use a nested environment in either region. This ensures I do not disrupt the underlying infrastructure.

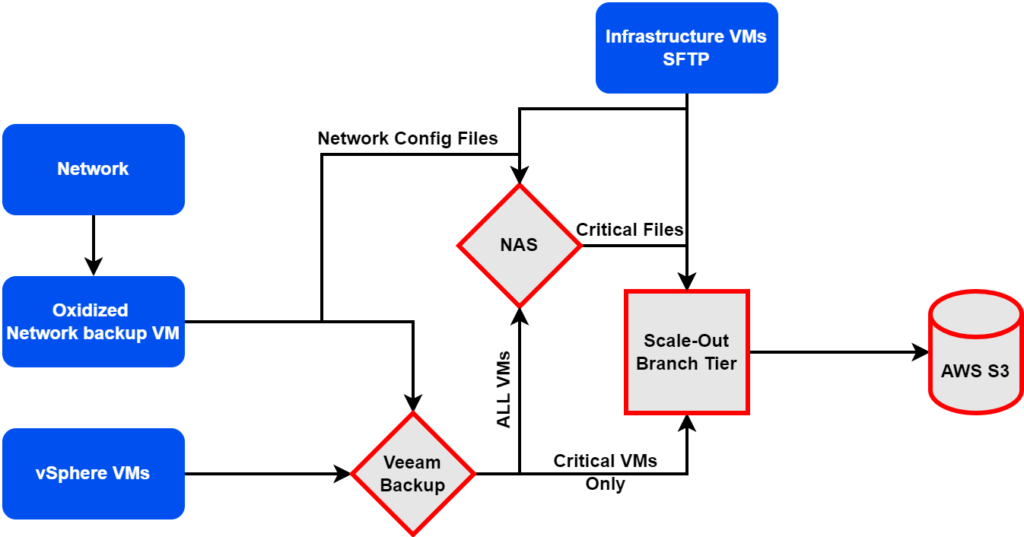

Backup Strategy

The diagram below depicts the backup workflow configured in the lab.

- Network device configurations (switches, routers, etc.) are pulled by Oxidized. A cron job on the Oxidized VM runs on a schedule and copies the files to a file share on the NAS.

- The Oxidized VM is backed up by Veeam, and this backup is stored on the NAS.

- vSphere VMs are backed up by Veeam and stored on the NAS. Critical VMs (AD, etc.) are also marked for secondary storage and replicated to AWS S3.

- Other critical files, such as those on the file share, are also marked for secondary storage and replicated to AWS S3.

- Infrastructure VMs (vCenter, NSX, SDDC Manager, etc.) are backed up via SFTP to the scale-out branch tier, as well as to the file share on the NAS.
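The cron job that moves Oxidized output onto the NAS is not shown in the post. A minimal Python sketch of what such a job might look like is below, assuming Oxidized uses its file output (one config file per device in a local directory) and that the NAS share is mounted on the Oxidized VM. Both paths and the crontab line are hypothetical placeholders, not the lab's actual settings.

```python
import shutil
from datetime import date
from pathlib import Path
from typing import List, Optional

# Hypothetical locations: adjust to the Oxidized output directory and NAS mount.
SOURCE_DIR = Path("/var/lib/oxidized/configs")
NAS_SHARE = Path("/mnt/nas/network-configs")

# Example crontab entry (hypothetical schedule and script path):
# 30 2 * * * /usr/bin/python3 /opt/scripts/copy_oxidized_configs.py


def copy_configs(source: Path, share: Path, day: Optional[date] = None) -> List[Path]:
    """Copy every file under `source` into a dated folder on the share."""
    day = day or date.today()
    target = share / day.isoformat()  # e.g. <share>/2024-05-01
    copied: List[Path] = []
    for cfg in sorted(source.rglob("*")):
        if not cfg.is_file():
            continue
        dest = target / cfg.relative_to(source)
        dest.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(cfg, dest)  # copy2 also preserves modification times
        copied.append(dest)
    return copied


if __name__ == "__main__":
    if SOURCE_DIR.is_dir():
        files = copy_configs(SOURCE_DIR, NAS_SHARE)
        print(f"copied {len(files)} config file(s) to {NAS_SHARE}")
    else:
        print(f"{SOURCE_DIR} not found; nothing copied")
```

Writing each run into a dated folder keeps earlier copies instead of overwriting them, so a bad config change can be compared against an older snapshot; pruning old folders is left out of this sketch.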

All the content I publish is created using this lab.